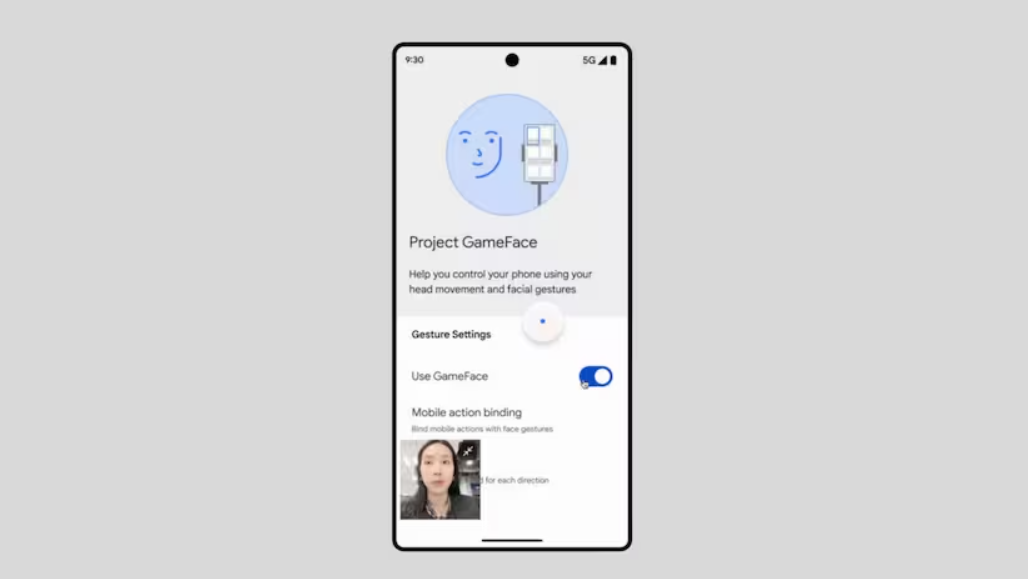

Google is bringing Project Gameface, first unveiled in May 2023, to Android. The open-source project provides a hands-free gaming mouse that lets people with disabilities control a computer cursor through facial gestures and head movements.

Initially built for PCs, the technology is now coming to the Android ecosystem, as Google announced at its annual developer conference, Google I/O. But what exactly is Project Gameface, and how does it work? Let’s look at the details.

What is Project Gameface?

On May 10, 2023, Google introduced Project Gameface, open-source code that lets developers build software for a hands-free gaming mouse. It uses head tracking and facial gesture recognition to move the computer cursor. The project was inspired by Lance Carr, a quadriplegic video game streamer who has muscular dystrophy. Google set out to give people with disabilities an additional way to operate their devices while keeping costs low enough for wide accessibility.

How Project Gameface Works:

The open-source code gives developers the tools to track facial expressions and head movements using either the device’s built-in camera or an external one. Those gestures and movements are then translated into intuitive, personalized controls.
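To make the idea of translating head movement into cursor movement more concrete, here is a minimal sketch. It is not taken from the Project Gameface code: the function name `cursor_delta`, the use of normalized camera coordinates, and the dead-zone value are all assumptions chosen for illustration.

```python
from typing import Tuple

# (x, y) position of a tracked face point in normalized image coordinates (0.0 to 1.0).
Point = Tuple[float, float]


def cursor_delta(prev: Point, curr: Point, screen: Tuple[int, int],
                 speed: float = 1.0, dead_zone: float = 0.002) -> Tuple[int, int]:
    """Convert the movement of a tracked face point between two frames
    into a cursor offset in pixels (hypothetical helper, for illustration)."""
    dx = curr[0] - prev[0]
    dy = curr[1] - prev[1]
    # Ignore very small movements so camera jitter does not move the cursor.
    if abs(dx) < dead_zone:
        dx = 0.0
    if abs(dy) < dead_zone:
        dy = 0.0
    # Scale the normalized movement to screen pixels, then apply the speed setting.
    return round(dx * screen[0] * speed), round(dy * screen[1] * speed)


# A head movement of 10% of the frame width on a 1000x500 screen.
print(cursor_delta((0.5, 0.5), (0.6, 0.5), screen=(1000, 500)))
```

A real implementation would also need smoothing across frames and handling for a mirrored front camera, both of which this sketch leaves out.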

Using the Project Gameface code, developers can offer users tailored configurations, including personalized facial expressions, gesture sizes, cursor speeds, and more. Google has collaborated with Incluzza, a social enterprise in India, to explore expanding the technology beyond gaming, with plans to add further capabilities such as typing.
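One way to picture such a per-user configuration is as a small settings object. The sketch below is purely illustrative: the class names, field names, and action names such as `left_click` are hypothetical and do not come from the Project Gameface code, and "gesture size" is treated here as how strongly an expression must register before it counts.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class GestureBinding:
    action: str        # hypothetical action name, e.g. "left_click"
    threshold: float   # assumed meaning of "gesture size": required strength, 0.0 to 1.0


@dataclass
class UserProfile:
    # One speed per direction, since a user's range of motion may differ by direction.
    cursor_speed: Dict[str, float] = field(
        default_factory=lambda: {"up": 1.0, "down": 1.0, "left": 1.0, "right": 1.0}
    )
    # Maps an expression name to the action it triggers.
    bindings: Dict[str, GestureBinding] = field(default_factory=dict)

    def bind(self, gesture: str, action: str, threshold: float) -> None:
        """Attach an action to a facial expression with a per-user threshold."""
        if not 0.0 <= threshold <= 1.0:
            raise ValueError("threshold must be between 0.0 and 1.0")
        self.bindings[gesture] = GestureBinding(action, threshold)


profile = UserProfile()
profile.cursor_speed["left"] = 1.5             # this user moves left less easily
profile.bind("jawOpen", "left_click", 0.4)     # open mouth to click
profile.bind("browInnerUp", "drag_toggle", 0.6)
```

Keeping each user's settings in one object like this would make it straightforward to save and reload profiles, though how the actual project stores its settings is not described here.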

Project Gameface on Android:

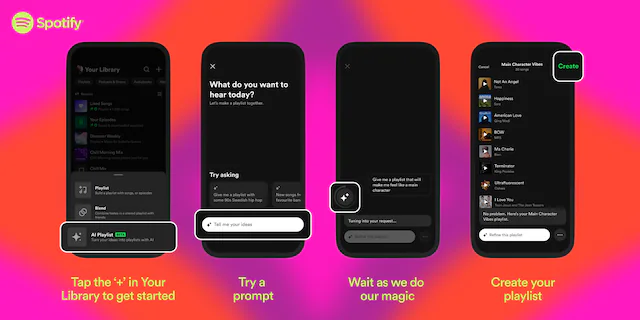

At its developer conference on May 14, 2024, Google announced that Project Gameface now supports Android devices. The Android version implements a virtual cursor within the Android operating system (OS) through the Android accessibility service, extending the technology’s reach to phones and tablets.

Using MediaPipe’s Face Landmarks Detection API, which recognizes 52 facial gestures, Project Gameface can map expressions to a wide range of functions. Developers can also set a different recognition threshold for each expression, which allows finer customization.
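The per-expression threshold idea can be sketched in a few lines. This example assumes the face tracker has already produced a score between 0.0 and 1.0 for each expression; the scores are hard-coded here rather than read from MediaPipe, and the function name `active_gestures` is an assumption, not part of any Google API.

```python
from typing import Dict, List


def active_gestures(scores: Dict[str, float],
                    thresholds: Dict[str, float]) -> List[str]:
    """Return the expressions whose score meets or exceeds their own threshold.

    Expressions without a configured threshold are ignored, and a configured
    expression missing from the scores is treated as not detected.
    """
    return sorted(
        name for name, limit in thresholds.items()
        if scores.get(name, 0.0) >= limit
    )


# Example scores for one camera frame (made-up values for illustration).
frame_scores = {"jawOpen": 0.72, "browInnerUp": 0.30, "mouthLeft": 0.05}

# Each expression gets its own threshold, so a subtle gesture can be made
# easy to trigger while an accidental one is made harder.
user_thresholds = {"jawOpen": 0.5, "browInnerUp": 0.6}

print(active_gestures(frame_scores, user_thresholds))
```

Here only `jawOpen` passes, because the raised-eyebrow score of 0.30 falls below that expression's 0.6 threshold.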

Project Gameface Availability:

The open-source code for Project Gameface is now available in Google’s GitHub repository for developers and enterprises alike.

How Google’s Project Gameface Differs from Apple’s Eye Tracking:

Unlike Eye Tracking, which Apple recently announced for iPhone and iPad, Google’s Project Gameface relies on head gestures and facial expressions for navigation rather than eye movement.

Apple’s feature is built into the device’s operating system and relies on on-device capabilities. Project Gameface, by contrast, is open-source code that third-party developers can integrate into their own software independently to add accessibility features.